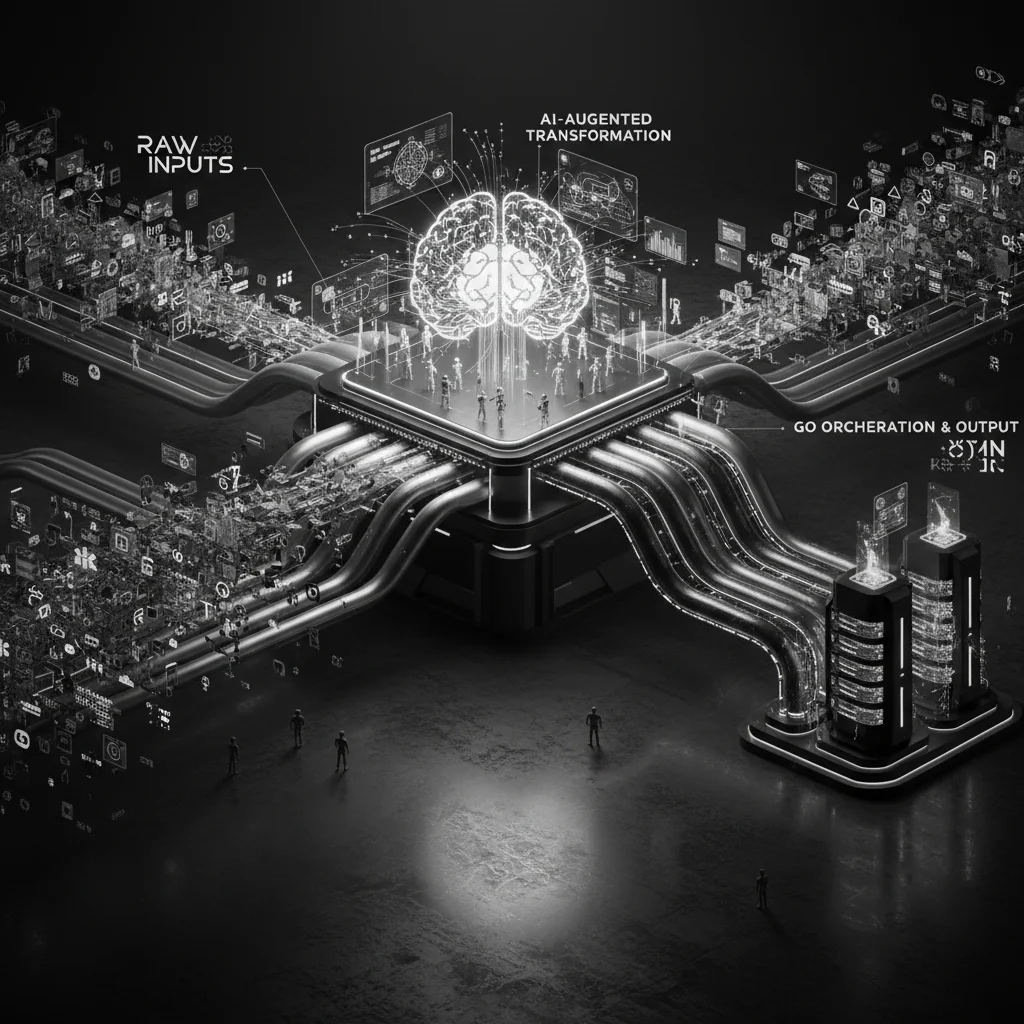

AI-Augmented ETL: Designing Agentic Data Pipelines Across Languages

Traditional Extract, Transform, Load (ETL) pipelines are rigid. They rely on strict schemas, regular expressions, and hard-coded logic. If the input data format changes slightly or requires semantic understanding (like extracting sentiment from a review or summarizing a chaotic PDF), traditional pipelines break.

Enter Agentic ETL. By embedding AI agents into the transformation layer, we can build pipelines that reason, adapt, and handle unstructured data with near-human comprehension. However, LLMs are slow and non-deterministic compared to compiled code.

This post explores a pragmatic, polyglot architecture: using Go for high-performance orchestration and I/O, and Python for the AI reasoning layer, ensuring a balance between speed, scalability, and intelligence.

The Architecture: The Muscle and The Brain

To build a production-grade Agentic pipeline, we separate concerns based on language strengths:

- The Muscle (Go): Handles concurrency, network I/O, retries, and orchestration. Go is excellent for moving data from A to B efficiently.

- The Brain (Python): Handles the AI logic. Python has the richest ecosystem for AI (LangChain, OpenAI SDK, PyTorch).

Connecting these two worlds (via gRPC or REST) allows us to scale the I/O independantly of the GPU/API-heavy inference.

Step 1: The Go Orchestrator

The Go component acts as the pipeline controller. It fetches data, manages state, and delegates complex reasoning tasks to the Python agents.

Here is an example of a Go worker that fetches a raw document and requests an AI extraction:

type Document struct {

ID string

Content string

}

// ProcessDocument handles the lifecycle of a single ETL task

func ProcessDocument(doc Document) error {

// 1. Pre-processing (Fast, deterministic)

cleanContent := sanitizeString(doc.Content)

// 2. Call Python Agent for Semantic Extraction (Slow, AI-driven)

// Using a hypothetical gRPC client wrapper

extraction, err := agentClient.ExtractEntities(context.Background(), &pb.ExtractRequest{

Text: cleanContent,

})

if err != nil {

// Handle retries or circuit breaking here

return fmt.Errorf("agent failure: %w", err)

}

// 3. Load to Database

return database.Save(doc.ID, extraction)

}

Step 2: The Python Reasoning Agent

On the Python side, we implement the agent. This isn't just an LLM call; it's a system with structured outputs and validation. Raw text from an LLM is useless for ETL; we need valid JSON.

We can use libraries like Pydantic or Instructor to force the LLM to return structured data.

from pydantic import BaseModel, Field

from typing import List

import openai

import instructor

# Define the target schema for the ETL step

class ProductInfo(BaseModel):

product_name: str

sentiment: str = Field(description="Customer sentiment: POSITIVE, NEGATIVE, or NEUTRAL")

features_mentioned: List[str]

# Patch OpenAI client for structured output

client = instructor.from_openai(openai.OpenAI())

def extract_entities(text: str) -> ProductInfo:

"""

Acts as the transformation layer, converting unstructured text

to a strict schema.

"""

try:

return client.chat.completions.create(

model="gpt-4-turbo",

response_model=ProductInfo,

messages=[

{"role": "system", "content": "You are a data extraction agent."},

{"role": "user", "content": f"Analyze this text: {text}"},

],

)

except Exception as e:

# Log the failure for auditability

print(f"Validation failed: {e}")

raise

Step 3: Guardrails and Auditability

Integrating non-deterministic AI into deterministic pipelines requires strict guardrails. You cannot trust the LLM blindly.

1. Structural Validation

In the Python snippet above, if the LLM returns invalid JSON or misses a field, the Pydantic model raises a validation error. The Go orchestrator catches this and can decide to retry (perhaps with a stronger model) or dead-letter the message.

2. Semantic Guardrails

Beyond structure, check for logic. If the sentiment field contains "ANGRY" but the schema only allows "POSITIVE/NEGATIVE/NEUTRAL", the pipeline should flag it. Tools like Guardrails AI can enforce these constraints before the data ever leaves the Python service.

3. The Audit Log

Reproducibility is the hardest part of AI ETL. Unlike code, the same input to an LLM might yield different outputs. You must log:

- The Prompt sent to the model.

- The Model Version (e.g.,

gpt-4-0613rather than justgpt-4). - The Raw Output before parsing.

Conclusion

By leveraging Go for the heavy lifting and Python for the cognitive load, you create an Agentic ETL pipeline that is both robust and intelligent. This architecture allows you to iterate on prompts and models in Python without destabilizing the high-throughput infrastructure running in Go.