MCPs versus APIs: The Blueprint for AI Connectivity

As a junior developer, you are likely already familiar with APIs (Application Programming Interfaces). They are the glue that holds the modern internet together, allowing your frontend to talk to your backend, or your app to talk to Stripe and Google Maps.

However, with the rise of Large Language Models (LLMs) and AI agents, a new standard has emerged: the Model Context Protocol (MCP).

You might be asking: "Why do we need a new protocol? Can't AI just use APIs?"

In this post, we'll explore what MCP is, how it differs from a standard API, and why it is becoming a common way to connect AI agents to the real world.

The Status Quo: APIs

Traditionally, an API is a rigid contract. If you want to use the GitHub API to get a list of issues, you need to read the documentation, understand the specific endpoint (GET /repos/{owner}/{repo}/issues), manage authentication headers, and parse a JSON response whose shape is specific to that endpoint.

Code for consuming an API usually looks like this:

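    import requests

    def get_weather(city):
        """Fetch the current weather for a city as parsed JSON."""
        api_key = "12345"  # placeholder; load real keys from configuration
        url = "https://api.weather.com/v1/current"
        # Passing params lets requests URL-encode the city name safely.
        response = requests.get(url, params={"q": city, "key": api_key}, timeout=10)
        response.raise_for_status()  # raise on 4xx/5xx instead of parsing an error body
        return response.json()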

This works well for traditional software, where a developer reads the documentation once and hard-codes the call. For an AI agent it is harder: the model doesn't inherently know that api.weather.com exists or how to format the URL unless it was trained on that API's documentation or is given a tool definition written specifically for it.
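
To make that last point concrete, here is a minimal sketch of the kind of hand-written tool definition an agent would need before it could call the weather function above. The field names (`name`, `description`, `parameters`) follow a common JSON Schema style, but the exact shape varies between model providers, so treat the structure and the `validate_arguments` helper as assumptions rather than any specific vendor's format.

```python
import json

# Hypothetical, hand-written description of the get_weather function.
# Without a shared protocol, each AI client needs its own copy of this.
GET_WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. 'Paris'"},
        },
        "required": ["city"],
    },
}

def validate_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the names of required parameters missing from `arguments`."""
    required = tool["parameters"].get("required", [])
    return [name for name in required if name not in arguments]

print(json.dumps(GET_WEATHER_TOOL, indent=2))
print(validate_arguments(GET_WEATHER_TOOL, {}))  # ['city']
```

Someone has to write and maintain a definition like this for every API and for every AI client that wants to use it, which is the duplication MCP aims to remove.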

What is an MCP?

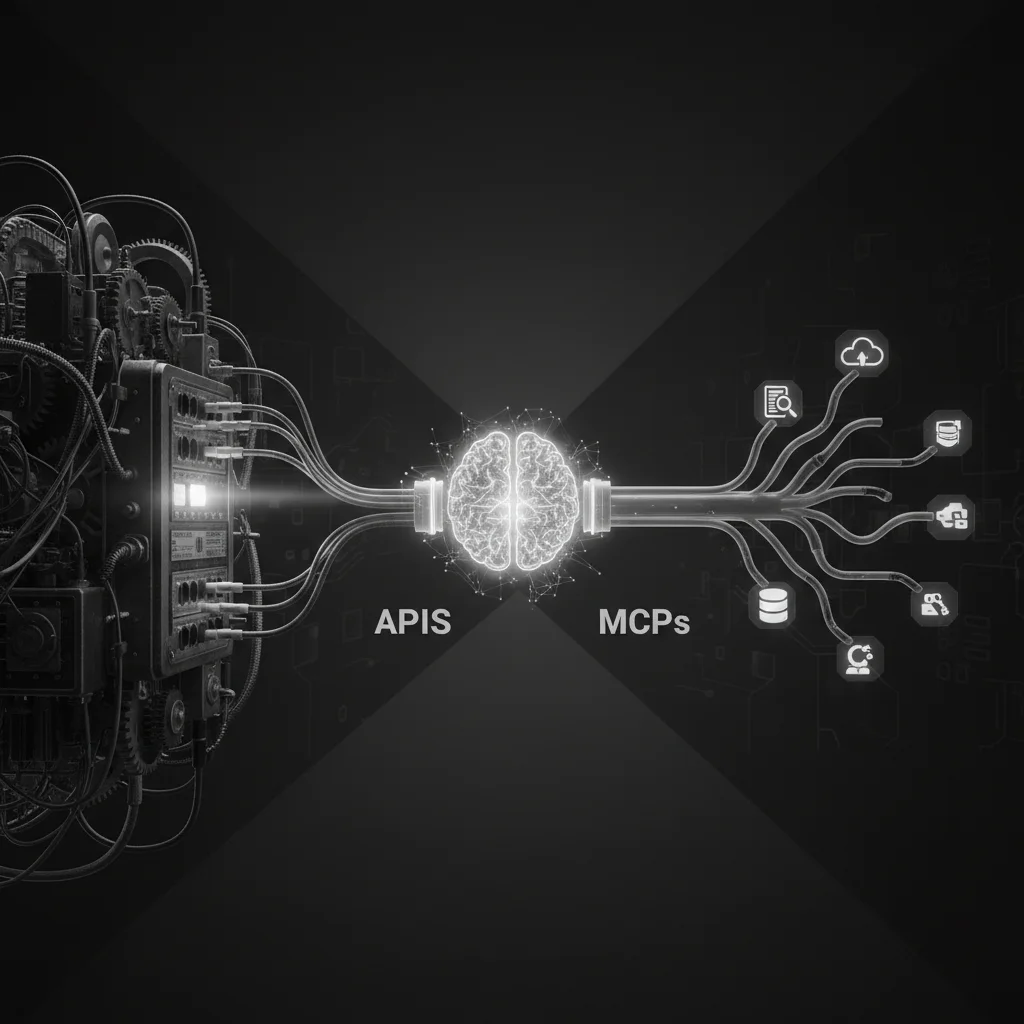

The Model Context Protocol (MCP) is an open standard that defines how AI applications connect to external data and tools. Think of it like a USB-C port for AI: one standard connector instead of a different cable for every device.

Instead of building a custom integration for every single data source (like Google Drive, Slack, or a Postgres database) for every single AI client (like Claude, ChatGPT, or an IDE), MCP provides a universal language.

The Architecture

MCP uses a client-host-server architecture:

- MCP Host: The application running the AI (e.g., the Claude Desktop app or an IDE like Cursor).

- MCP Client: The connector inside the host. Each client maintains a one-to-one connection with a single MCP server.

- MCP Server: A lightweight program that exposes data and tools.
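
The relationship between the three roles can be sketched with a toy model. This is not the MCP SDK: the `Host`, `Client`, and `Server` classes and the server and tool names are made up for illustration. The only point it makes is that a host owns one client per server it connects to.

```python
from dataclasses import dataclass, field

@dataclass
class Server:
    """Stands in for an MCP server: a program exposing some capabilities."""
    name: str
    tools: list[str]

@dataclass
class Client:
    """Stands in for an MCP client: one connection to exactly one server."""
    server: Server

@dataclass
class Host:
    """Stands in for an MCP host: the AI application that owns the clients."""
    name: str
    clients: list[Client] = field(default_factory=list)

    def connect(self, server: Server) -> None:
        # The host creates a dedicated client for each server it connects to.
        self.clients.append(Client(server))

    def available_tools(self) -> list[str]:
        # The model sees the combined tools of every connected server.
        return [tool for client in self.clients for tool in client.server.tools]

host = Host("example-ide")
host.connect(Server("weather", ["get_forecast"]))
host.connect(Server("issues", ["list_issues", "create_issue"]))
print(host.available_tools())  # ['get_forecast', 'list_issues', 'create_issue']
```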

Key Differences: APIs vs. MCPs

While MCP servers might use APIs under the hood to fetch data, the way they expose that data to the AI is fundamentally different.

1. Discovery vs. Hard-coding

- API: You hard-code endpoints in your application.

- MCP: The AI application discovers capabilities at runtime. When the host's MCP client connects to a server, it asks what the server offers, and the server replies, in effect, "Here are the resources I have, here are the prompts I know, and here are the tools I can execute."
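
The discovery step can be sketched as a JSON-RPC exchange. MCP messages use JSON-RPC 2.0, and a client asks for a server's tools with the `tools/list` method. The toy handler below is not the MCP SDK. It only mimics the shape of that request and response, and the `get_forecast` entry and the `handle_request` function are illustrative assumptions.

```python
import json

# Tools this toy server offers. Each entry carries a name, a description
# for the model, and a JSON Schema describing the expected arguments.
TOOLS = [
    {
        "name": "get_forecast",
        "description": "Get the weather forecast for a specific city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
]

def handle_request(request: dict) -> dict:
    """Answer a JSON-RPC request; only `tools/list` is supported here."""
    if request.get("method") == "tools/list":
        return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": TOOLS}}
    # -32601 is the JSON-RPC 2.0 code for "Method not found".
    return {
        "jsonrpc": "2.0",
        "id": request.get("id"),
        "error": {"code": -32601, "message": "Method not found"},
    }

request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
response = handle_request(request)
print(json.dumps(response, indent=2))
```

Nothing about the weather service is hard-coded on the client side: the client learns the tool's name, purpose, and argument schema from the response.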

2. Context vs. Data Transfer

- API: Focuses on sending and receiving raw data.

- MCP: Focuses on providing context. It exposes Resources (data the AI can read, like files or logs) and Prompts (templates for AI interactions) alongside executable tools.
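
As a rough illustration of resources and prompts, the sketch below models a resource as data addressed by a URI and a prompt as a reusable message template. The URI `logs://app/latest`, the log text, the prompt name, and the helper functions are all hypothetical. Real servers expose these through the protocol's `resources/read` and `prompts/get` methods rather than direct function calls.

```python
# Hypothetical resource store: URI -> text content the model can read.
RESOURCES = {
    "logs://app/latest": "ERROR database connection timed out",
}

# Hypothetical prompt templates: name -> template with named placeholders.
PROMPTS = {
    "summarize_log": "Summarize the following log and suggest a fix:\n\n{log_text}",
}

def read_resource(uri: str) -> dict:
    """Return a resource in a shape similar to a `resources/read` result."""
    return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": RESOURCES[uri]}]}

def get_prompt(name: str, arguments: dict) -> dict:
    """Fill a template and return it as a list of chat messages."""
    text = PROMPTS[name].format(**arguments)
    return {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}

# Read the log resource, then drop its text into the prompt template.
log = read_resource("logs://app/latest")["contents"][0]["text"]
prompt = get_prompt("summarize_log", {"log_text": log})
print(prompt["messages"][0]["content"]["text"])
```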

3. The "N x M" Problem

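Without MCP, connecting N AI clients to M data sources takes up to N x M custom integrations: 3 AI tools and 5 data sources means 15 of them. With MCP, each client implements the protocol once and each data source gets one MCP server, so the same setup needs 3 + 5 = 8 pieces, and any MCP-compliant client can use a new server without a custom integration.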
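
The arithmetic is easy to check in a few lines. This sketch assumes the simplest case, where every client must reach every data source and each MCP client or server is written once. The function names are made up for illustration.

```python
def custom_integrations(clients: int, sources: int) -> int:
    """Every client needs its own integration with every data source."""
    return clients * sources

def mcp_components(clients: int, sources: int) -> int:
    """Each client implements MCP once; each source gets one MCP server."""
    return clients + sources

for clients, sources in [(3, 5), (10, 20)]:
    print(
        f"{clients} clients, {sources} sources: "
        f"{custom_integrations(clients, sources)} custom integrations "
        f"vs {mcp_components(clients, sources)} MCP components"
    )
```

The gap widens as the ecosystem grows, because one count grows with the product of the two numbers and the other with their sum.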

More Than Just a Wrapper

You might think, "Isn't an MCP just a wrapper around an API?"

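Technically, yes, an MCP server often wraps an API. However, it adds a layer of abstraction specifically designed for LLMs. It turns an API from something programmatic, which a developer wires up by hand, into something semantic, which a model can choose to use based on a description of what it does.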

Here is how you might define a tool in a Python MCP server using the FastMCP helper from the official Python SDK. Notice the docstring: it becomes the tool's description, which the AI reads to decide when and how to use the tool.

    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("Weather Service")

    @mcp.tool()
    def get_forecast(city: str) -> str:
        """
        Get the weather forecast for a specific city.
        Use this tool when the user asks about weather conditions.
        """
        # Logic to call the actual external API goes here
        return f"The weather in {city} is sunny and 75 degrees."

    if __name__ == "__main__":
        mcp.run()  # start the server (stdio transport by default)

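In a typical API codebase, a docstring is documentation for other developers. Here, FastMCP uses the docstring as the tool's description, which the server sends to the client during discovery and which the host passes to the model so it can decide when to call the tool.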
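
To see how a docstring can end up in a protocol message, here is a simplified, standard-library-only sketch of turning a function into a tool entry. It is an approximation of the idea, not FastMCP's actual implementation. The `describe_tool` helper and the `JSON_TYPES` mapping are assumptions made for this example.

```python
import inspect

def get_forecast(city: str) -> str:
    """
    Get the weather forecast for a specific city.
    Use this tool when the user asks about weather conditions.
    """
    return f"The weather in {city} is sunny and 75 degrees."

# Simplified mapping from Python annotations to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def describe_tool(func) -> dict:
    """Build a tool entry from a function's name, docstring, and type hints."""
    params = inspect.signature(func).parameters
    properties = {
        name: {"type": JSON_TYPES.get(param.annotation, "string")}
        for name, param in params.items()
    }
    return {
        "name": func.__name__,
        # inspect.getdoc returns the docstring with indentation cleaned up.
        "description": inspect.getdoc(func),
        "inputSchema": {
            "type": "object",
            "properties": properties,
            # Simplification: treat every parameter as required.
            "required": list(params),
        },
    }

tool = describe_tool(get_forecast)
print(tool["description"])
```

The model never sees the function body. It sees the name, the description, and the argument schema, so a vague docstring leads directly to a tool the model uses poorly.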

Why MCPs Are Exploding in Popularity

The rise of AI Agents is the main driver behind MCP adoption. Agents need to perform actions: query databases, read local files, or deploy code.

Before MCP, developers had to manually paste context into chat windows or build fragile custom plugins. Now, developers can run a local MCP server that connects a local database to their AI chat interface, with the server deciding exactly which data and actions it exposes.

Summary

- APIs are for software-to-software communication with rigid structures.

- MCPs are for AI-to-software communication with discoverable, context-aware structures.

- MCPs are not just API wrappers; they standardize prompts, resources, and tools to solve the interoperability problem between different AI models and data sources.

As a junior developer, learning how to build a simple MCP server is a great way to future-proof your skills in the age of AI engineering.